Cross-Modality Domain Adaptation for Medical Image Segmentation - 2021

👋 See the 2022 crossMoDA edition!

Announcements

In response to requests, we have postponed the start of the testing phase by 10 days.

NVIDIA sponsors one NVIDIA RTX 3090 (24 GB - retail price: 1500$) for the challenge winner!

Challenge participants will have the opportunity to submit their methods as part of the post-conference MICCAI BrainLes 2021 proceedings.

Aim¶

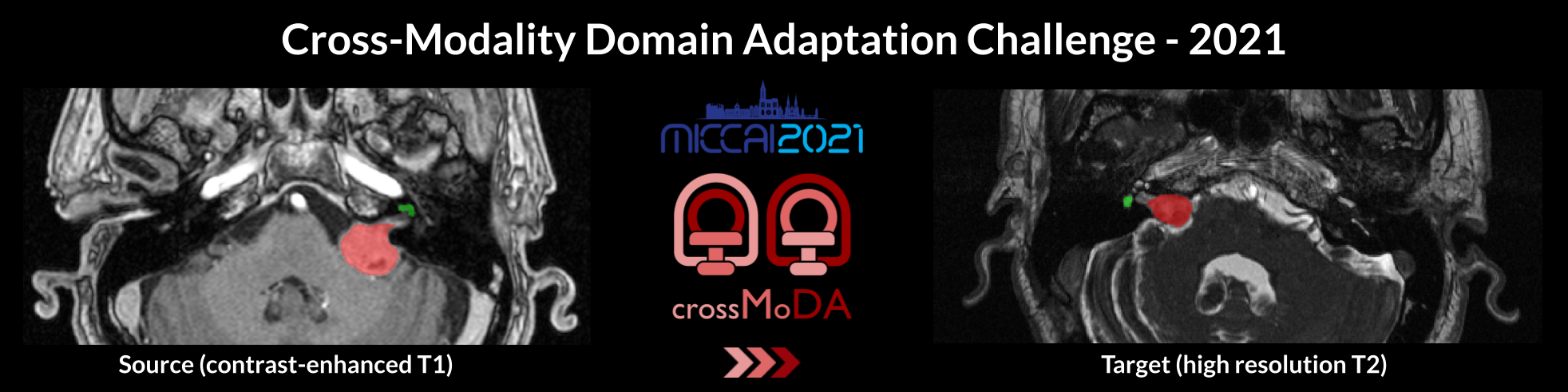

This challenge proposes the first medical imaging benchmark of unsupervised cross-modality Domain Adaptation approaches (from contrast-enhanced T1 to high-resolution T2).¶

Motivation

Domain Adaptation (DA) has recently attracted strong interest in the medical imaging community. By encouraging algorithms to be robust to unseen situations or different input data domains, Domain Adaptation improves the applicability of machine learning approaches to various clinical settings. While a large variety of DA techniques has been proposed for image segmentation, most of these techniques have been validated either on private datasets or on small publicly available datasets. Moreover, these datasets mostly address single-class problems. To tackle these limitations, the crossMoDA challenge introduces the first large and multi-class dataset for unsupervised cross-modality Domain Adaptation.

Task

The goal of the challenge is to segment two key brain structures involved in the follow-up and treatment planning of vestibular schwannoma (VS): the tumour and the cochlea. While contrast-enhanced T1 (ceT1) Magnetic Resonance Imaging (MRI) scans are commonly used for VS segmentation, recent work has demonstrated that high-resolution T2 (hrT2) imaging could be a reliable, safer, and lower-cost alternative to ceT1. For these reasons, we propose an unsupervised cross-modality challenge (from ceT1 to hrT2) that aims to automatically perform VS and cochlea segmentation on hrT2 scans. The training source and target sets are respectively unpaired annotated ceT1 and non-annotated hrT2 scans.

Evaluation

Classical semantic segmentation metrics, namely the Dice Score (DSC) and the Average Symmetric Surface Distance (ASSD), will be used to assess different aspects of segmentation performance for each region of interest. These metrics are implemented here. The metrics (DSC, ASSD) were chosen because of their simplicity, their popularity, their rank stability, and their ability to assess the accuracy of the predictions.
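To make the two metrics concrete, here is a minimal pure-Python sketch. It is not the official evaluation code: the function names (`dice_score`, `surface`, `assd`), the representation of a mask as a set of voxel coordinates, the 6-connected surface definition, and the handling of empty masks are all assumptions made for illustration.

```python
from math import sqrt

# 6-connected neighbourhood used to decide which voxels lie on the surface
# (an assumption; other implementations may extract surfaces differently).
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def dice_score(pred, ref):
    """Dice = 2|P & R| / (|P| + |R|) for two sets of (x, y, z) voxels."""
    if not pred and not ref:
        return 1.0  # assumption: two empty masks count as perfect agreement
    return 2.0 * len(pred & ref) / (len(pred) + len(ref))


def surface(mask):
    """Voxels of the mask that have at least one neighbour outside the mask."""
    return {
        v for v in mask
        if any((v[0] + dx, v[1] + dy, v[2] + dz) not in mask
               for dx, dy, dz in OFFSETS)
    }


def assd(pred, ref, spacing=(1.0, 1.0, 1.0)):
    """Average symmetric surface distance, in the units of `spacing`."""
    sp, sr = surface(pred), surface(ref)
    if not sp or not sr:
        raise ValueError("ASSD is undefined when one of the masks is empty")

    def dist(a, b):
        return sqrt(sum(((a[i] - b[i]) * spacing[i]) ** 2 for i in range(3)))

    # Brute-force nearest-surface distances in both directions.
    pred_to_ref = [min(dist(a, b) for b in sr) for a in sp]
    ref_to_pred = [min(dist(b, a) for a in sp) for b in sr]
    return (sum(pred_to_ref) + sum(ref_to_pred)) / (len(sp) + len(sr))


# Toy example: a 4x4x4 cube and the same cube shifted by one voxel along x.
ref = {(x, y, z) for x in range(4) for y in range(4) for z in range(4)}
pred = {(x + 1, y, z) for (x, y, z) in ref}

print(dice_score(pred, ref))  # 48 shared voxels out of 64 + 64 -> 0.75
print(assd(pred, ref))        # small positive distance, at most one voxel
```

The brute-force distance search is only suitable for small toy masks; a practical implementation would typically rely on distance transforms.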

Participating teams are ranked for each target testing subject, for each evaluated region (i.e., VS and cochlea), and for each measure (i.e., DSC and ASSD). The final ranking score for each team is then calculated by first averaging these individual rankings for each patient (i.e., Cumulative Rank), and then averaging these cumulative ranks across all patients.
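The ranking scheme described above can be sketched as follows. This is an illustrative reading of the text, not the organisers' code: the nested-dictionary layout, the function names, the tie-handling rule (tied teams share the best rank), and the toy scores are all assumptions.

```python
from statistics import mean

# DSC: higher is better. ASSD: lower is better.
HIGHER_IS_BETTER = {"DSC": True, "ASSD": False}


def rank_teams(values, higher_is_better):
    """Map team -> rank (1 = best). Tied teams share the best rank (assumption)."""
    ordered = sorted(values.values(), reverse=higher_is_better)
    return {team: ordered.index(v) + 1 for team, v in values.items()}


def final_ranking_scores(scores):
    """scores[team][patient][(region, metric)] -> value; returns team -> score."""
    teams = list(scores)
    patients = list(scores[teams[0]])
    cumulative = {t: [] for t in teams}
    for p in patients:
        ranks = {t: [] for t in teams}
        # One ranking per (region, metric) pair for this patient.
        for key in scores[teams[0]][p]:
            _, metric = key
            r = rank_teams({t: scores[t][p][key] for t in teams},
                           HIGHER_IS_BETTER[metric])
            for t in teams:
                ranks[t].append(r[t])
        # Cumulative rank: mean of this patient's individual rankings.
        for t in teams:
            cumulative[t].append(mean(ranks[t]))
    # Final score: mean of the cumulative ranks over all patients.
    return {t: mean(cumulative[t]) for t in teams}


# Made-up scores for two hypothetical teams and two hypothetical patients.
example = {
    "A": {
        "p1": {("VS", "DSC"): 0.90, ("VS", "ASSD"): 0.5,
               ("cochlea", "DSC"): 0.80, ("cochlea", "ASSD"): 0.3},
        "p2": {("VS", "DSC"): 0.70, ("VS", "ASSD"): 1.0,
               ("cochlea", "DSC"): 0.60, ("cochlea", "ASSD"): 0.4},
    },
    "B": {
        "p1": {("VS", "DSC"): 0.85, ("VS", "ASSD"): 0.6,
               ("cochlea", "DSC"): 0.82, ("cochlea", "ASSD"): 0.2},
        "p2": {("VS", "DSC"): 0.80, ("VS", "ASSD"): 0.8,
               ("cochlea", "DSC"): 0.70, ("cochlea", "ASSD"): 0.5},
    },
}

final = final_ranking_scores(example)
print(final)  # {'A': 1.625, 'B': 1.375}
```

A lower final score is better, so in this toy example team B would be placed ahead of team A.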

Rules

- No additional data is allowed, including the data released on TCIA and pre-trained models.

The use of a generic brain atlas is tolerated as long as its use is made clear and justified.

Example of tolerated use cases:

- Spatial normalisation to MNI space

- Use of classical single-atlas based tools (e.g., SPM)

Example of cases that are not allowed:

- Multi-atlas registration based approaches in the target domain

- No additional annotations are allowed.

- Models can be adapted (trained) on the target domain (using the provided target training set) in an unsupervised way, i.e. without labels.

- The participant teams will be required to release their training and testing code and explain how they fine-tuned their hyper-parameters. Note that the code can be shared with the organizers only as a way to verify validity, and if needed, NDAs can be signed.

- The top 3 ranked teams will be required to submit their training and testing code in a Docker container for verification after the challenge submission deadline, to ensure that the challenge rules have been respected.

Feel free to contact us using the forum (preferred option) or directly with questions.

Timeline

| 26th March 2021: | Registration is open! |

| 5th April 2021: | Release of the training and validation data (see data page) |

| 5th May 2021: | Start of the validation period. Participants are invited to submit their predictions on the validation dataset (see submission page) |

|  | Start of the evaluation period. Participants are invited to submit their predictions on the testing dataset (see instructions page) |

|  | End of the evaluation period |

| 27th September 2021: | Challenge results are announced at MICCAI 2021 |

| 30th November 2021: | Participants are invited to submit their methods to the MICCAI 2021 BrainLes Workshop. |

| December 2021: | Submission of a joint manuscript summarizing the results of the challenge to a high-impact journal in the field (e.g., TMI, MedIA) |

Sponsors

NVIDIA sponsors one NVIDIA RTX 3090 (24 GB - retail price: 1500$) for the challenge winner.

BANA (British Acoustic Neuroma Association) sponsors a cash prize of £100.
